Interpreting mLaP Results

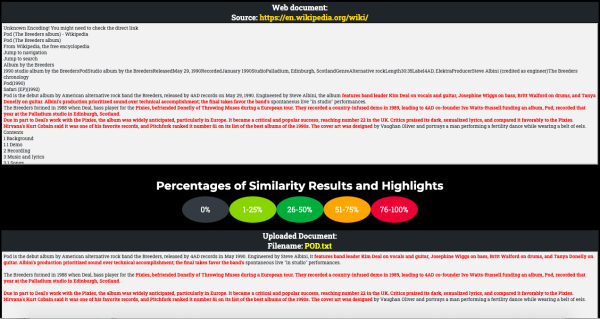

Is this piece plagiarized? The moodLearning anti-plagiarism service (mLaP) provides information that helps the teacher determine whether a submitted piece has been plagiarized. This information comes in the form of similarity percentages, ranging from 0 to 100%.

mLaP highlights the matching passages. Red highlighting, for instance, marks similarities of 75 to 100%.
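As a rough illustration of how a similarity percentage could be produced, the Python sketch below compares overlapping three-word sequences (word trigrams) in a submission against a single source and reports the share of the submission's trigrams that also appear in the source. mLaP's own matching method is not described on this page; the trigram approach and the names `word_ngrams`, `similarity_percent`, and `is_red_band` are assumptions for illustration only. Only the 75 to 100% red band comes from mLaP.

```python
from __future__ import annotations

import re


def word_ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of lowercase word n-grams (hypothetical tokenization)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def similarity_percent(submission: str, source: str, n: int = 3) -> float:
    """Share of the submission's n-grams also found in the source, from 0 to 100."""
    sub = word_ngrams(submission, n)
    if not sub:
        return 0.0  # text too short to form a single n-gram
    return 100.0 * len(sub & word_ngrams(source, n)) / len(sub)


def is_red_band(percent: float) -> bool:
    """True for the 75-100% range that mLaP highlights in red."""
    return 75.0 <= percent <= 100.0
```

For example, "the quick brown fox jumps" shares two of its three trigrams with "the quick brown fox sleeps", giving about 66.7%, which falls below the red band.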

Contextual Info

In certain cases, determining whether a submitted document is plagiarized may require contextual information: how readable it is, whether it deals with the topic head-on, or whether the submitter might have finished it “too quickly” given the allotted time. Below are some of the tools (readability scores, a word cloud, and other word statistics) we have added for teachers and evaluators to use as background information. These tools are integrated into the mLaP service.

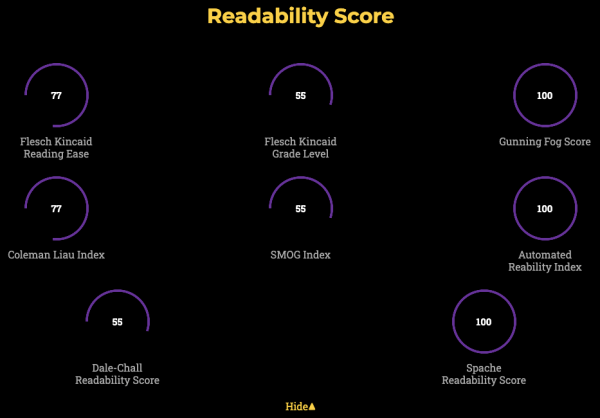

Readability

To add context that may help in interpreting a document submitted for mLaP scanning, readability scores are appended. These scores are based on the average length of the sentences and words in the document (words per sentence, and syllables or characters per word, depending on the formula). A Flesch reading-ease score of 60, for instance, means the document should be easy to read for most people with at least an 8th-grade education (roughly a 13-year-old in the U.S. school system). The higher the Flesch reading-ease score, the more readable the document.
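The Flesch reading-ease score is commonly computed as 206.835 - 1.015 × (words ÷ sentences) - 84.6 × (syllables ÷ words). The sketch below applies that formula in Python. Counting syllables exactly requires a pronunciation dictionary, so `count_syllables` here is a rough vowel-group heuristic (an assumption, not necessarily how mLaP counts), and its results may differ somewhat from the scores shown in mLaP.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, then drop a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1  # e.g. "make" has one syllable, but "table" keeps two
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    """Flesch reading ease: higher scores mean easier text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word
```

A short, plain sentence such as "The cat sat on the mat." scores above 100 with this sketch, while a sentence built from long, multi-syllable words can fall below zero.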

The relevant questions in relation to plagiarism, then, might be these. Is this submission consistent with the readability of the submitter's previous submissions? Is its readability related to the readability of its sources (Roig 2001; Sun 2012; Torres & Roig 2005)? One study, for example, found that “incidences of potential plagiarism such as exact copying and near copying appeared to be higher for the low-readability text (33%) than the high-readability text (11.2%)” (Sun 2012: 302).

Did the submitters simply run the text through a paraphrasing tool mechanically, without truly making it “their own”? Paraphrasing is likely when plagiarism involves less-readable sources, and in such cases a paraphrasing engine might have been used.
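One way to put the question of consistency with earlier submissions into practice is to compare a new submission's readability score against the same student's previous scores. The Python sketch below flags a score that lies more than two standard deviations from the student's average. The rule itself, the two-standard-deviation `threshold`, and the `min_sd` floor are hypothetical choices for illustration, not mLaP features; a flag is only a prompt to look closer.

```python
from __future__ import annotations

from statistics import mean, stdev


def readability_outlier(previous: list[float], current: float,
                        threshold: float = 2.0, min_sd: float = 1.0) -> bool:
    """Hypothetical check: is `current` unusually far from the student's past scores?"""
    if len(previous) < 2:
        return False  # not enough history to estimate a spread
    # The floor keeps a very uniform history from flagging tiny differences.
    sd = max(stdev(previous), min_sd)
    return abs(current - mean(previous)) / sd > threshold
```

With earlier Flesch scores of 60, 62, 58, 61, and 59, a new score of 61 passes unremarked, while a score of 35 is flagged.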

Which Readability Test?

The Flesch tests are used across disciplines and make a good starting point, which is why they appear first on the Readability Dashboard. Technical writers may refer to the Automated Readability Index, and for business writing you may also check the Gunning Fog Index. The medical and healthcare fields tend to favor the Coleman-Liau Index and the SMOG Index.

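The Dale-Chall Readability Formula and the Spache Readability Formula were developed with U.S. schoolchildren in mind: Spache suits texts for the early elementary grades, while Dale-Chall is used for fourth grade and up.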

More than 200 readability formulas exist, and their details are beyond the scope of this page. mLaP uses only a few of them. Pick the ones you are most comfortable with or know best; moodLearning is here to help.
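Two of the formulas mentioned above, the Automated Readability Index (ARI) and the Coleman-Liau Index (CLI), count characters instead of syllables, which makes them easy to sketch. Their commonly published forms are ARI = 4.71 × (characters ÷ words) + 0.5 × (words ÷ sentences) - 21.43 and CLI = 0.0588 × L - 0.296 × S - 15.8, where L is letters per 100 words and S is sentences per 100 words. Both approximate a U.S. grade level. The regex-based tokenization below (letters and digits counted together, sentences split at . ! ?) is a simplifying assumption, and mLaP's implementation may differ.

```python
from __future__ import annotations

import re


def _counts(text: str) -> tuple[int, int, int]:
    """Return (characters, words, sentences) using simple regex tokenization."""
    words = re.findall(r"[A-Za-z0-9']+", text)
    chars = sum(sum(ch.isalnum() for ch in w) for w in words)
    sentences = len([s for s in re.split(r"[.!?]+", text) if s.strip()])
    if not words or not sentences:
        raise ValueError("text needs at least one sentence and one word")
    return chars, len(words), sentences


def automated_readability_index(text: str) -> float:
    chars, words, sentences = _counts(text)
    return 4.71 * (chars / words) + 0.5 * (words / sentences) - 21.43


def coleman_liau_index(text: str) -> float:
    letters, words, sentences = _counts(text)
    letters_per_100 = letters / words * 100      # L in the formula
    sentences_per_100 = sentences / words * 100  # S in the formula
    return 0.0588 * letters_per_100 - 0.296 * sentences_per_100 - 15.8
```

Very short, simple sentences can produce negative values with either formula; scores become meaningful on passages of some length.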

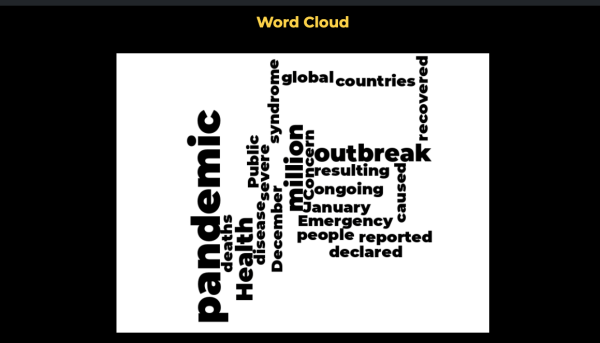

Word Cloud

A word cloud highlights the words or concepts that are prominent in the document. Each word's font size reflects its prominence relative to the other words or concepts in the text, so a quick glance can show whether the submission deals with the topic head-on.
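The sizing rule can be sketched as a word-frequency count followed by linear scaling: the most frequent word gets the largest font, and the least frequent of the top words gets the smallest. In the Python sketch below, the stopword list, the 10 to 48 point range, and the `top_n` cutoff are illustrative assumptions; mLaP's word cloud may weigh words differently.

```python
from __future__ import annotations

import re
from collections import Counter

# Hypothetical, deliberately short stopword list for illustration.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "on",
             "is", "are", "it", "that", "for"}


def word_cloud_sizes(text: str, top_n: int = 20,
                     min_pt: float = 10.0, max_pt: float = 48.0) -> dict[str, float]:
    """Map the top_n most frequent non-stopwords to font sizes in points."""
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]
    common = Counter(words).most_common(top_n)
    if not common:
        return {}
    highest, lowest = common[0][1], common[-1][1]
    span = (highest - lowest) or 1  # all counts equal: everything gets min_pt
    return {w: min_pt + (c - lowest) / span * (max_pt - min_pt) for w, c in common}
```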

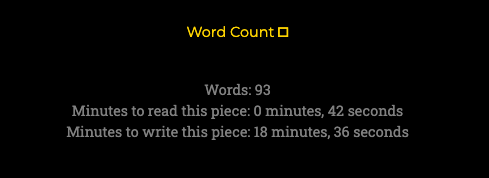

Word Count

Based on the total number of words, one may estimate how long it takes an average person to read the piece, at a rate of about 130 words per minute. Similarly, one may estimate the number of minutes or hours an average writer would need to write such a piece. Was the submission finished suspiciously fast? If it looks too good to be true, it probably is.
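The arithmetic is simple enough to sketch. Reading time is the word count divided by the reading rate (130 words per minute, as above). This page gives no fixed writing rate, so `writing_wpm` below is a hypothetical, teacher-chosen parameter, and the helper names are illustrative.

```python
import re

READING_WPM = 130  # reading rate stated on this page


def word_count(text: str) -> int:
    """Count whitespace-separated tokens."""
    return len(re.findall(r"\S+", text))


def reading_minutes(words: int, wpm: float = READING_WPM) -> float:
    """Estimated reading time in minutes."""
    return words / wpm


def looks_too_fast(words: int, minutes_taken: float, writing_wpm: float) -> bool:
    """True if the time taken is shorter than writing `words` at `writing_wpm` would need."""
    return minutes_taken < words / writing_wpm
```

For example, a 1,300-word essay takes about 10 minutes to read at 130 words per minute. If a teacher assumes 20 words per minute of drafting, a 1,000-word piece handed in after 20 minutes would be flagged for a closer look.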

Selected References

Roig, M. (2001). Plagiarism and Paraphrasing Criteria of College and University Professors. Ethics & Behavior, 11(3), 307–323. https://doi.org/10.1207/S15327019EB1103_8

Shi, L., Fazel, I., & Kowkabi, N. (2018). Paraphrasing to transform knowledge in advanced graduate student writing. English for Specific Purposes, 51, 31–44. https://doi.org/10.1016/j.esp.2018.03.001

Sun, Y.-C. (2012). Does Text Readability Matter? A Study of Paraphrasing and Plagiarism in English as a Foreign Language Writing Context. Asia-Pacific Education Researcher, 21, 296–306.

Torres, M., & Roig, M. (2005). The Cloze Procedure as a Test of Plagiarism: The Influence of Text Readability. The Journal of Psychology, 139(3), 221–232. https://doi.org/10.3200/JRLP.139.3.221-232